Welcome to the friendly guide on “Data Cleaning and Validation,” where you’ll discover the essential steps to ensure your data is accurate and reliable. In this article, you’ll learn how to identify and correct errors, fill in missing information, and validate your data to make it useful for analysis. By mastering these techniques, you can enhance the quality of your data and make better-informed decisions. Get ready to dive into the fascinating world of data cleaning and validation!

Have you ever looked at a dataset and wondered where to even begin to make sense of the mess in front of you? You’re not alone. Many people find themselves overwhelmed by data, especially when it’s unclean, unorganized, or downright misleading. This is where data cleaning and validation come in. It’s not just about tidying up; it’s about making your data reliable, accurate, and useful.

Table of Contents

What is Data Cleaning?

Data cleaning, sometimes referred to as data cleansing or data scrubbing, is a crucial process in data management. It involves detecting and correcting (or removing) corrupt or inaccurate records from a dataset. The primary objective is to ensure that your data is accurate, consistent, and reliable, thereby making it suitable for analysis.

Why Is Data Cleaning Important?

Imagine if a chef used spoiled ingredients to prepare a meal. No matter how skilled the chef is, the end dish would be nothing short of a disaster. In a similar fashion, the quality of your data affects the quality of your analysis and the insights you derive from it. Clean data delivers more accurate results, leading to better decision-making.

Common Issues in Data Cleaning

Here are some of the most common issues you might encounter:

- Missing Values: Empty fields that can skew results.

- Inconsistent Data: Data that doesn’t follow a consistent format.

- Duplicate Records: Multiple records that represent the same entity.

- Outliers: Data points that are significantly different from others.

- Typographical Errors: Spelling or format mistakes in the data entries.

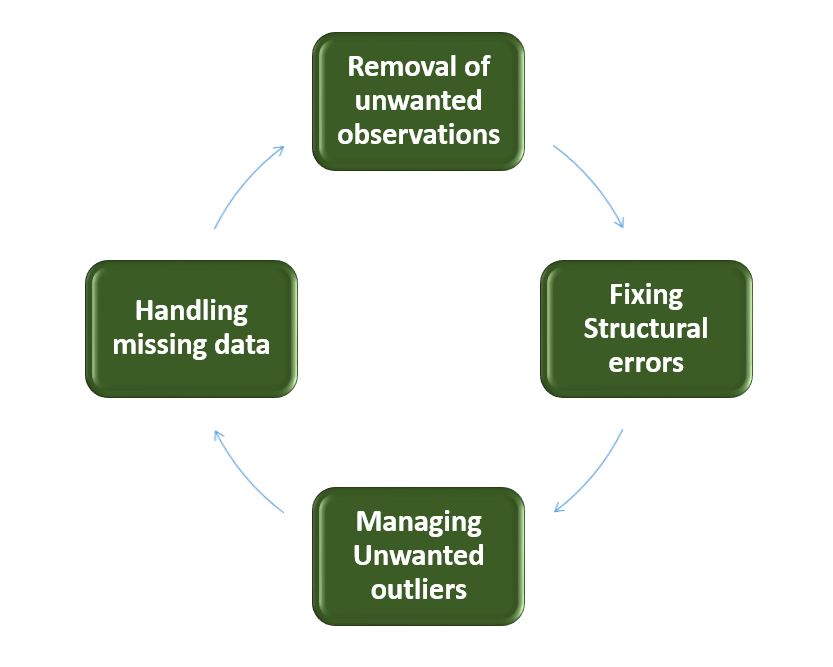

Steps in Data Cleaning

Step 1: Importing Data

The first step is to import your data, which could be in various formats like CSV, JSON, or even SQL databases.

Step 2: Examine Data

Take a look at your dataset to understand its structure, the range of values, and the anomalies. You can use tools like Pandas in Python or libraries in R for this initial examination.

Step 3: Handle Missing Data

Decide how to handle missing data. You can either remove the rows with missing values, fill them with a default value, or use other imputation methods.

Step 4: Remove Duplicates

Check for duplicate entries and remove them to ensure each record is unique.

Step 5: Correct Errors

Identify and correct typographical errors, inconsistent formats, and other inaccuracies.

Step 6: Standardize Data

Normalize your data so it adheres to a single standard format. This is important for consistent analysis.

Step 7: Validate Data

Validate your data to ensure its accuracy and integrity.

Techniques for Handling Missing Data

Handling missing data is a critical part of data cleaning. Here are some techniques:

Deleting Rows or Columns

If the missing values are insignificant, you might opt to delete the rows or columns containing them.

Imputation

Imputation is the process of replacing missing values with substituted ones. Common methods include:

- Mean/Median Imputation: Replacing missing values with the mean or median of the column.

- Mode Imputation: For categorical data, replace missing values with the mode.

- Predictive Modeling: Use algorithms to predict the missing values based on other available data.

Using Indicators

Create an indicator variable to flag data points that initially had missing values.

Data Validation

Once your data is clean, the next step is data validation. This is the process of verifying that your data conforms to the desired accuracy and quality.

Types of Validation

Data can be subject to various types of validation checks:

- Range Validity: Ensures values fall within a specific range.

- Format Validity: Ensures data follows a specific format, like email addresses or phone numbers.

- Consistency Checks: Ensures data is consistent across different datasets.

- Uniqueness Checks: Verifies that data entries are unique.

- Completeness Checks: Ensures no required data is missing.

Techniques for Data Validation

Depending on the type of data and the framework you’re working in, there are several techniques for data validation:

Manual Validation

While manual validation is time-consuming, it’s a surefire way to ensure data quality. Reviewing data entries individually can catch inconsistencies and errors.

Programmatic Validation

Using scripts and programs to validate data can save a lot of time. Libraries in Python like Pandas, Data-Strength, and Cerberus offer pre-built functionalities for this purpose.

Validation Rules

You can set up data validation rules using software platforms and data management tools. These rules automatically check for errors and inconsistencies each time data is entered or modified.

Tools for Data Cleaning and Validation

Thankfully, you don’t have to clean and validate your data manually. There are many tools available to help streamline these processes.

OpenRefine

OpenRefine is an open-source tool specifically designed for cleaning messy data. It allows you to explore large datasets with ease and even supports complex data transformations.

Trifacta

Trifacta is a more advanced, commercial data wrangling tool. It’s well-suited for larger organizations and offers a range of features for cleansing and validating data.

Pandas in Python

If you’re familiar with Python, Pandas is an excellent library for data manipulation and cleaning. It provides robust functionality for merging datasets, handling missing data, and more.

Tableau Prep

For those more inclined toward visual work, Tableau Prep offers a user-friendly interface to visualize and clean your data.

Talend

Talend is another powerful tool for data integration that also includes features for cleaning and validating data.

Real-world Applications

Data cleaning and validation have a wide variety of applications across different domains. Let’s look at a few examples:

Healthcare

In healthcare, clean and validated data can improve patient care by ensuring accurate patient records, tracking treatments, and complying with regulations.

E-commerce

For e-commerce platforms, clean data is crucial for understanding customer behavior, managing inventory, and personalizing shopping experiences.

Academia

Academic researchers rely on clean data to derive accurate insights from their studies. Validated data ensures the credibility of their research findings.

Finance

Financial analysts depend on clean and validated data for forecasting, risk management, and compliance with financial regulations.

Challenges in Data Cleaning and Validation

Despite the tools and techniques available, data cleaning and validation come with their fair share of challenges.

Volume of Data

With big data, the sheer volume of data can make cleaning and validation a cumbersome process.

Complexity

Data often comes from different sources, each with its own format and characteristics, adding layers of complexity to the cleaning process.

Costs

Investing in commercial tools or dedicating resources to develop in-house solutions can be expensive.

Time

Data cleaning and validation are time-consuming tasks. Sometimes, the urgency to derive insights may lead to cutting corners, thereby compromising data quality.

Best Practices for Data Cleaning and Validation

To make the process as efficient and effective as possible, here are some best practices:

Document Your Process

Keep a detailed record of your data cleaning and validation steps. This will not only help in replicating the process but also in troubleshooting any issues that arise later.

Automate

Wherever possible, automate the cleaning and validation process using scripts or tools. This will save you time and reduce the risk of human error.

Use Checkpoints

Incorporate checkpoints in your process where the data is validated before moving to the next step. This way, you catch errors early on.

Continuous Monitoring

Data cleaning and validation should not be one-time tasks. Implement continuous monitoring to maintain data quality over time.

Involve Stakeholders

Make sure all relevant stakeholders understand the importance of data quality and are involved in the validation process. Their input can be invaluable.

Train the Team

Ensure that your team is well-trained in using data cleaning and validation tools and techniques. This includes staying updated with new methods and technologies.

Conclusion

Data cleaning and validation are essential steps in ensuring that your data is accurate, consistent, and ready for analysis. Without these steps, any insights derived from your data could be misleading or downright wrong. By following the best practices and utilizing the right tools, you can tackle the most common data issues effectively. So, roll up your sleeves, dive into that messy dataset, and transform it into a goldmine of reliable insights!